
The line between the physical and digital worlds is eroding faster than ever, and 3D modeling for AR and VR sits at the center of that change. From lifelike simulations that train surgeons to virtual sneaker try-ons in a store, AR and VR are changing how we learn, work, and experience reality itself. The power of these immersive environments comes from one crucial ingredient: the 3D models that populate them.

So what exactly is this 3D modeling for AR/VR?

It’s the creation, shaping, and optimization of digital objects that blend seamlessly into augmented and virtual environments. These objects are the visual foundation of interactive worlds, from hyperrealistic human avatars to complex architectural spaces. Unlike conventional 3D graphics, an AR/VR model has to balance realism against performance: it must render in real time within each device’s constraints without losing too much visual fidelity.

In this guide, you will learn step by step how to create, optimize, and integrate 3D models for immersive AR and VR experiences. It covers tools from Blender to Unity and Unreal Engine, plus emerging trends from AI-assisted 3D modeling to real-time rendering. Whether you are an artist, developer, or brand strategist, mastering these techniques will allow you to produce immersive apps that feel smooth, real, and emotionally involving.

Ready to move from flat screens to fully immersive worlds with Esferasoft?

Let’s dive into the art and science of 3D modeling for AR/VR – where creativity meets technology.

Understanding 3D Modeling in AR and VR

What Is 3D Modeling for AR/VR?

3D modeling for AR and VR is the process of designing digital objects that exist in three-dimensional space and interact with users in an immersive environment. These models can represent a character, a building, or an entire world, and they form the basis of both augmented and virtual reality. The difference lies in where they live: in VR, the models make up a fully lifelike environment where everything from textures to lighting feels real and reacts accordingly, while in AR, the models are placed into the real world through smartphones, headsets, or smart glasses, combining the real and the digital into a single real-time experience.

What makes 3D modeling for AR and VR unique is the fine line it walks between visual realism and technical efficiency. Every polygon, vertex, and material adds to the processing load, so creators are constantly optimizing while trying to preserve visual quality. The result is a blend of artistry, spatial design, and computational precision that turns imagination into interactive reality.

Difference Between AR and VR 3D Models

Although both rely on 3D assets, the modeling principles for AR and VR differ.

- AR models are built to integrate with the real world. They need to be small in file size, fast to load, and true to real-world scale so they look realistic when viewed through a camera or a headset. Real-time lighting and shadows help blend these assets into the surroundings.

- VR models, by contrast, live in entirely virtual environments. They emphasize depth, realism, and interactivity, with higher polygon counts, detailed textures, and complex shaders. Yet because they are viewed up close and in 360°, performance optimization remains important: even a small delay can break the immersion.

In short, AR models have to be agile, while VR models have to be deep. Developers need to understand this distinction to build models that play to each medium’s strengths.

Why High-Quality Models Drive Immersion

Immersion and fluidity define the success of any AR or VR application. Subtle visual discrepancies, such as misaligned textures, unrealistic lighting, or stiff animations, break the illusion. High-quality 3D models keep the user immersed because the visual detail matches what they physically expect.

Polished assets do not just add realism; they increase engagement, usability, and retention. A training simulation feels more natural, a product demo feels more credible, and a VR game feels filled with life. When visuals flow seamlessly with motion and space, it’s less about the user viewing and more about the user experiencing.

Adoption of AR/VR and 3D Modeling in Industries

Across industries, 3D modeling for AR and VR has matured from an experimental concept into a powerful driver of innovation, learning, and customer participation. Companies have begun using immersive technologies to visualize their ideas, making interaction more human and intuitive.

Gaming & Entertainment

The gaming industry was among the first to adopt 3D models for virtual reality, where player engagement is key to success. Titles built in engines such as Unity or Unreal use high-fidelity models, dynamic lighting, and real-time rendering to immerse players in alternate worlds. Beyond games, entertainment is using AR filters and VR concerts to create shared digital moments in which performance and storytelling are intertwined with interaction.

E-commerce & Virtual Try-Ons

AR has made online shopping interactive. Retailers use 3D assets to let customers try on shoes, arrange furniture, or even look inside a car. For example, IKEA has an AR app that allows users to place 3D furniture models at real scale in their rooms, which increases their confidence in the purchase and can lower return rates. This combination of realism and easy access shows what AR 3D modeling adds to decision-making.

Healthcare & Medical Training

In medicine, AR/VR 3D models are improving accuracy and learning. Surgeons can rehearse operations on realistic anatomy in a virtual environment, while medical students learn through interactive simulations instead of static diagrams. Visualizing organs or bone structures in 3D makes training safer, faster, and far more economical.

Education & Interactive Learning

Schools and universities apply this technology to transform conventional lessons into immersive experiences. Students can travel through the solar system in VR or watch chemical reactions unfold in AR. Real-time rendering combined with 3D assets makes abstract subjects tangible, raises engagement, and strengthens retention.

Real Estate & Architecture Visualization

For architects and developers, virtual walkthroughs built from 3D models give a real sense of a design’s scale, lighting, and aesthetics before construction begins. Through VR tours or AR overlays, buyers can see in real time how the interior, materials, and landscaping will look, which leads to faster approvals and more confident investments.

Core Principles of AR/VR 3D Modeling

Realistic, interactive 3D models for AR and VR are more than artwork: they rest on engineering principles of precision and optimization, and on an understanding of how immersive systems handle visual data. Whether the model is for a medical simulation, a virtual showroom, or a game environment, these principles make sure every asset looks superb and runs smoothly in real time.

Polygon Count & Model Optimization

Performance is paramount in AR/VR. Every 3D model, whatever its surface, is made of polygons, the small geometric units that define shape and detail. The higher the polygon count, the more detail the model can carry, but the heavier it is to render. Optimization is about balancing visual fidelity with smooth performance across devices.

Most developers use level-of-detail (LOD) techniques, where a model automatically switches between high- and low-resolution versions according to its distance from the user.
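To make the idea concrete, here is a minimal sketch of distance-based LOD selection. The distance thresholds and mesh names are hypothetical and chosen only for illustration; engines such as Unity and Unreal ship their own LOD systems, which you would normally use instead of hand-written logic like this.

```python
from bisect import bisect_right

# Hypothetical switch distances in meters; real values depend on the asset and device.
LOD_DISTANCES = [5.0, 15.0, 40.0]

# Hypothetical mesh variants, ordered from most to least detailed.
# There is always one more mesh than there are thresholds.
LOD_MESHES = [
    "chair_lod0_high",
    "chair_lod1_medium",
    "chair_lod2_low",
    "chair_lod3_billboard",
]


def select_lod(distance_m: float) -> str:
    """Return the mesh variant to draw for a viewer at the given distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # bisect_right counts how many thresholds the distance has reached or passed.
    index = bisect_right(LOD_DISTANCES, distance_m)
    return LOD_MESHES[index]


for d in (2.0, 10.0, 25.0, 100.0):
    print(f"{d:>6.1f} m -> {select_lod(d)}")
```

The closer the viewer, the more detailed the mesh; beyond the last threshold the cheapest variant is always used.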

Scale & Real-World Accuracy

Scale accuracy is another vital element of realistic immersion: an AR object that deviates too much in size from its real-world counterpart, or a VR space that feels wrongly sized, immediately pulls the user out of the sense of presence. This is why designers adhere to real-world measurements when building assets: a 1-meter table in real life must measure exactly 1 meter in the 3D scene.

In AR, improper scaling can disrupt interactions that depend on physical space, whereas in VR it distorts the user’s perception and navigation. Correct scale ensures natural movement and consistent depth, so users engage more intuitively with the virtual environment.
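A common source of scale errors is moving an asset between tools that measure in different units. The sketch below converts dimensions between unit conventions. It assumes default settings: Blender's metric unit system with a unit scale of 1.0, Unity's convention of treating one unit as one meter, and Unreal Engine's default of one unit per centimeter. The function names are illustrative, and exporter settings in a real pipeline may already apply some of this scaling for you.

```python
# Meters represented by one unit in each tool, assuming default settings.
METERS_PER_UNIT = {
    "blender": 1.0,   # default metric unit system, unit scale 1.0
    "unity": 1.0,     # by convention, 1 unit is treated as 1 meter
    "unreal": 0.01,   # 1 Unreal unit is 1 centimeter by default
}


def scale_factor(source: str, target: str) -> float:
    """Factor to multiply source-unit lengths by to get target-unit lengths."""
    return METERS_PER_UNIT[source] / METERS_PER_UNIT[target]


def convert_size(size, source: str, target: str):
    """Convert a (width, depth, height) tuple between unit conventions."""
    factor = scale_factor(source, target)
    # Round to suppress floating-point noise in the converted values.
    return tuple(round(value * factor, 6) for value in size)


# A table modeled at 1.0 x 0.6 x 0.75 meters in Blender.
table_m = (1.0, 0.6, 0.75)
print(convert_size(table_m, "blender", "unreal"))  # dimensions in centimeters
print(convert_size(table_m, "blender", "unity"))   # unchanged, both use meters
```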

Lighting, Shading & Material Realism

Lighting brings every model to life. Realistic illumination and shadows allow an object to merge with its environment, be it virtual or augmented. Physically based materials reproduce how surfaces respond to light (metal reflects, fabric scatters, glass refracts), adding depth and believability to what one sees.

Properly baked lightmaps, dynamic reflections, and global illumination techniques keep scenes visually consistent. Lighting does more than make a scene appealing; it sets the emotional tone and realism of the experience.

Texture Mapping & UV Best Practices

Textures add surface detail that cannot be achieved with polygons alone. With UV mapping, artists assign textures to particular areas of a model, as if wrapping a 2D image around a 3D form. Good UV layouts minimize distortion and make later edits much more efficient.

Textures used in AR/VR are generally high quality but optimized for speed, typically delivered in compressed formats such as PNG or WebP. Normal, roughness, and ambient occlusion maps improve how small details react to changes in light, making surfaces look realistic without needing more polygons.

Whether you are sculpting a single character or creating an entire world, every outstanding immersive experience starts with these principles. Optimization, scale, and realism determine how well users connect with it.
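It helps to see why texture size matters so much. The sketch below estimates how much GPU memory a single texture occupies once loaded. It is an approximation under stated assumptions: the function name is illustrative, a full mipmap chain is treated as adding roughly one third to the base size, and 32 bits per pixel corresponds to uncompressed RGBA8 while 8 bits per pixel corresponds to block-compressed GPU formats such as BC7 or ASTC 4x4. Note that the file size of a PNG or WebP on disk is not the same as its footprint in GPU memory.

```python
def texture_memory_bytes(width: int, height: int, bits_per_pixel: int,
                         mipmaps: bool = True) -> int:
    """Approximate GPU memory used by one texture, in bytes."""
    base = width * height * bits_per_pixel // 8
    if not mipmaps:
        return base
    # A full mip chain adds about 1/4 + 1/16 + ... of the base level, roughly one third.
    return base * 4 // 3


def to_mib(size_bytes: int) -> float:
    return size_bytes / (1024 * 1024)


for label, bpp in (("uncompressed RGBA8", 32), ("block-compressed, 8 bpp", 8)):
    size = texture_memory_bytes(2048, 2048, bpp)
    print(f"2048x2048 {label}: {to_mib(size):.1f} MiB with mipmaps")
```

A model with separate base color, normal, roughness, and ambient occlusion maps multiplies this cost by the number of maps, which is why resolution and compression choices add up quickly on mobile hardware.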

AR/VR 3D Modeling Software and Tools

The right software can turn an idea into an immersive experience. Whether you are modeling for AR or VR applications, texturing assets, or building entire virtual environments, your software choice will shape your workflow and the quality of your final product. Each platform offers different levels of control, automation, and performance; knowing which works best for your project is key.

Blender

For AR/VR creators, Blender is one of the most capable options thanks to its versatility and open-source nature. It combines modeling, sculpting, rigging, and animation in one environment. Its Eevee engine provides real-time rendering, Cycles provides path-traced rendering, and an active developer community keeps adding features useful for AR/VR pipelines.

For independent developers and small studios, Blender offers high-quality workflows comparable to those of industry leaders, without any license fees. Its export support for the leading engines, Unity and Unreal, also makes AR/VR 3D asset creation on a budget straightforward.

Autodesk Maya & 3ds Max

Autodesk’s tools remain an industry benchmark for cinematic-level modeling and animation. Maya specializes in advanced rigging, dynamic simulations, and realistic character animation, all critical for VR experiences that need to convey body language and expression. 3ds Max, on the other hand, is a favorite for architectural visualization and product design, mainly due to its robust modifier stack and accurate modeling tools.

Both tools fit into AR/VR workflows because they export to FBX and OBJ, which eases the transition from modeling to engine deployment. Their principal advantage is control: every curve, vertex, and texture can be tuned and refined.

Unity and Unreal Engine

Once the models have been created, they need a home, and that’s where Unity and Unreal Engine come in. These engines handle real-time rendering, lighting simulation, user interaction, and physics for AR/VR.

Unreal Engine, with its Nanite and Lumen systems, offers very high visual fidelity for enterprise-level VR and cinematic experiences.

Both engines allow creators to test and deploy across platforms such as Meta Quest, HoloLens, and smartphones with minimal rework.

Sculpting with ZBrush

If detail is your main concern, ZBrush will be your mainstay. Built specifically for digital sculpting, it helps artists create intricate, organic surfaces such as human faces, creatures, and refined props. The high-poly sculpts can then be retopologized and optimized for AR/VR environments, and the DynaMesh and ZRemesher tools support the pipeline from sculpt to game-ready asset. ZBrush combines artistry and precision, bringing to life the small details that make immersion possible.

Texturing with Adobe Substance Painter

Textures and materials often determine how “real” a virtual object looks. Adobe Substance Painter simplifies this task by supporting physically based rendering (PBR) texturing with real-time visual feedback. It also supports smart materials, which adapt to the geometry of the model they are applied to, saving time and keeping assets consistent.

Combining Substance Painter with Substance Designer allows artists to create custom material libraries, which in turn keep environments coherent and up to professional standards.

Software is all well and good; the real story, however, is in how you sculpt that vision into a finished asset.

Step-by-Step Process of Creating 3D Models for AR/VR

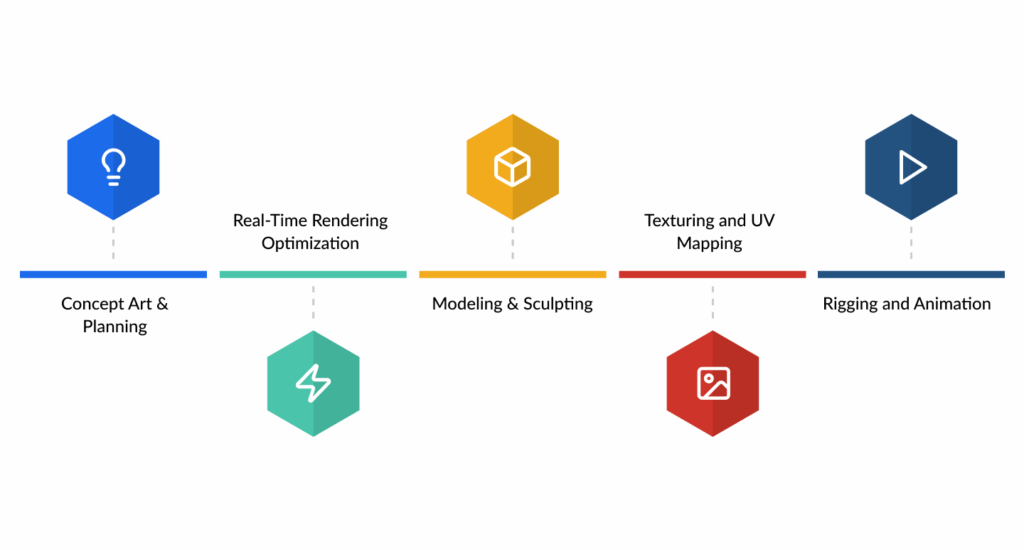

Bringing an immersive world to life starts well before rendering or animation. Any successful AR/VR 3D model begins as a carefully considered concept that passes through several stages of design, testing, and optimization. This path of precision and iteration is the same whether the application is intended for a VR headset or mobile AR. The stages below are how professional teams build high-end 3D assets for AR and VR.

Concept Art & Planning

Every project begins with a clear vision. The concept art phase defines the look, mood, and purpose of your 3D model. Artists work on initial designs, collect references, and establish a visual style so that the final model is consistent with the goals of the product or experience.

For AR and VR, this phase also defines the user’s perspective and interaction. A VR asset viewed up close requires more detail than an AR object seen from a distance. Planning for these parameters early avoids heavy redesigns later.

Successful teams also document scale, color palettes, and environment integration to ensure continuity between the real and virtual space.

Modeling & Sculpting

Once the concepts are approved, the next step is bringing them to life. Artists working in Blender, Maya, or ZBrush start shaping the object’s geometry. This stage focuses on form, proportion, and topology: creating meshes that are clean and efficient for later animation and lighting.

Sculpting software can define fine details, such as wrinkles, engravings, or surface patterns. However, high-poly sculpts are then retopologized into optimized versions that AR/VR engines can handle. Maintaining the balance between detail and performance is critical for fast real-time rendering.

Texturing and UV Mapping

Textures are the life and soul of every model. Tools such as Substance Painter and Quixel Mixer help apply PBR materials that respond naturally to light and environmental conditions.

Textures for AR/VR need to be optimized, usually with compressed formats and baked lightmaps, to maintain acceptable frame rates. Keeping normal, metallic, and roughness maps consistent with one another helps produce believable surfaces without overloading the GPU.

Rigging and Animation (If Applicable)

If the model requires motion, as with a character, a robot arm, or an interactive object, it is now time for rigging. The artist adds a skeleton so that limbs and parts can move in a controlled way in response to inputs or physics. Once rigging is complete, animation is applied using keyframes, motion capture, or procedural techniques in Unity or Unreal.

Smooth animation adds realism and keeps the user inside the experience, especially in scenarios involving continuous interaction, such as VR simulations and AR product demos.

Real-Time Rendering Optimization

Before integration, models are prepared for real-time performance. This involves polygon reduction, texture compression, shader adjustments, and lighting tests on all target devices.

Testing spans every target headset and phone so that the model retains its performance and visual consistency across varied hardware.
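One way to make this preparation step repeatable is to check each scene against a per-device budget before it goes to testing. The sketch below is only an illustration: the device names, triangle limits, and texture limits are hypothetical placeholders, and real budgets should come from profiling on the actual hardware.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    triangles: int
    texture_mb: float


# Hypothetical per-scene budgets; replace with numbers measured on your targets.
BUDGETS = {
    "mobile_ar": {"triangles": 100_000, "texture_mb": 128.0},
    "standalone_vr": {"triangles": 300_000, "texture_mb": 512.0},
    "pc_vr": {"triangles": 2_000_000, "texture_mb": 2048.0},
}


def check_scene(assets, device: str):
    """Return a list of budget violations for the given device (empty if none)."""
    budget = BUDGETS[device]
    total_tris = sum(a.triangles for a in assets)
    total_tex = sum(a.texture_mb for a in assets)
    problems = []
    if total_tris > budget["triangles"]:
        problems.append(f"triangles: {total_tris} used, {budget['triangles']} allowed")
    if total_tex > budget["texture_mb"]:
        problems.append(
            f"texture_mb: {total_tex:.1f} used, {budget['texture_mb']:.1f} allowed"
        )
    return problems


scene = [
    Asset("sofa", 40_000, 32.0),
    Asset("lamp", 15_000, 8.0),
    Asset("rug", 60_000, 16.0),
]
for device in BUDGETS:
    issues = check_scene(scene, device)
    print(device, "OK" if not issues else issues)
```

A check like this does not replace on-device testing, but it catches obviously oversized scenes early.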

Each polygon tells a story, but the process brings it to life.

AI-Powered 3D Modeling: The Next Frontier

Modeling, sculpting, and texturing tasks that once took days can now be completed within hours, sometimes minutes. To be clear, AI is not replacing artists; it is extending their capabilities by automating mundane tasks so that creators can focus on the heart of immersion: storytelling, vision, and refinement.

Generative AI Creating Assets on Autopilot

AI-based tools (Nvidia Omniverse, Runway, Kaedim, and OpenAI’s Shap-E) allow artists to provide text prompts, sketches, or reference images and generate 3D meshes directly. Rather than starting from scratch, the artist refines an AI-generated base model to fit their broader vision. This removes much of the grind and speeds up ideation and prototyping: AR/VR developers can drop AI-generated models into a scene as placeholders or even final assets, shortening schedules considerably without necessarily compromising quality.

AI-Powered Texturing and Environment Building

AI goes beyond geometry to texture generation and environment design. Adobe Firefly and Substance AI Assist, for example, can produce convincing PBR-compatible materials in a matter of seconds. AI scene-building software can generate scenery, vegetation, and props for an entire virtual world from simple prompts, making world-building faster and more interactive.

Benefits and Artistic Control

AI helps optimize assets for consistent performance across devices, which improves speed and cost-effectiveness. Yet artistic control must prevail, because AI output can often drift from the intended art direction and strip away important detail. The best results come when artists learn to guide AI systems, marrying human instinct with machine accuracy.

The future of 3D modeling isn’t about automation alone; it’s about the collaboration of creativity and code.

Technical Challenges and Performance Optimization

Creating 3D models for AR and VR means balancing visual beauty against technical constraints. Immersive environments need convincing realism, real-time responsiveness, and compatibility with varied devices, all within stringent performance budgets. Problems such as lag, distorted rendering, or overheating can turn even the most beautiful assets into failures. Teams that understand these difficulties and plan for optimization from the very start of development deliver smoother, more consistent experiences for all users.

Managing High-Resolution Assets

High-quality models often come with very high-resolution textures and dense geometry that can overwhelm the hardware. AR applications need lightweight assets that load instantly on mobile devices; VR headsets, on the other hand, need rendering optimized to hold a comfortable frame rate, typically 60 to 120 frames per second.

In practice, developers lower polygon counts, bake complex lighting, and use texture atlases to consolidate many materials into one. The balancing act is between responsiveness and realism: rich visuals without overloading the hardware.
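A frame-rate target translates directly into a time budget for each frame: the budget in milliseconds is 1000 divided by the target frames per second. The sketch below works this out for a few common targets. The function names are illustrative, and the fit check makes the simplifying assumption that CPU and GPU work overlap, so the slower of the two sets the frame time.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def fits_budget(cpu_ms: float, gpu_ms: float, target_fps: float) -> bool:
    """Simplified check: assumes CPU and GPU work in parallel."""
    return max(cpu_ms, gpu_ms) <= frame_budget_ms(target_fps)


for fps in (60, 72, 90, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.2f} ms per frame")

# Example: measured frame costs of 6.0 ms on the CPU and 10.5 ms on the GPU.
print(fits_budget(6.0, 10.5, 90))   # within an 11.11 ms budget
print(fits_budget(6.0, 10.5, 120))  # exceeds an 8.33 ms budget
```

Doubling the target from 60 to 120 frames per second halves the time available, which is why an asset that feels fine on one device can cause dropped frames on another.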

Cross-Platform Performance (Mobile vs PC VR)

Not all devices are equal, so an asset that performs well on one may perform poorly on another. A model that runs smoothly on desktop VR might choke on smartphone-based AR. To compensate for these differences, developers rely on dynamic LOD scaling, texture compression, and real-time rendering adjustments.

Cross-platform testing is key to delivering a consistent user experience, whether the user is walking through a museum exhibit on a high-end headset or previewing a product in AR on their phone.

Device Compatibility (Meta Quest, HoloLens, Smartphones)

Each AR/VR device has its own requirements for the graphics pipeline and interaction framework. Meta Quest, for example, prioritizes standalone performance; Microsoft HoloLens emphasizes environmental tracking; and smartphones depend extensively on camera and sensor data.

Optimizing for each means understanding its memory limits, shader constraints, and texture capabilities so that models work correctly regardless of the ecosystem.

Testing & Quality Assurance

Prior to release, developers test extensively to stabilize the frame rate and check texture streaming, lighting artifacts, and input responsiveness. Iteration, supported by analytics tools and user testing, helps identify weak points and performance bottlenecks. The 3D animations in immersive experiences run smoothly thanks to thousands of invisible technical refinements.

Best Practices for High-Quality AR/VR 3D Models

Creativity matters, but even the most advanced tools and software for 3D modeling in AR and VR will fail without discipline. Building optimized 3D models for AR and VR takes precision, efficiency, and storytelling: giving assets a realistic appearance, loading them in real time, and placing them convincingly into immersive spaces. Here are some best practices used by well-known studios.

Optimize Meshes and Reduce Polygon Count

High polygon counts do not automatically make a model look better. A well-structured model can look detailed and still render efficiently. Lighter assets reduce lag and camera drift, and so help sustain good AR and VR performance.

Apply PBR Materials to Create Realistic Surfaces

PBR is now standard practice for achieving lifelike results. It emulates how light interacts with different surfaces, such as metal, wood, or glass, under real-world conditions. Combining PBR with normal, roughness, and metallic maps creates extra depth and realism without adding unnecessary geometry.

Cross-Verify on Different Devices

Test the models on as many platforms as possible: Meta Quest, HTC Vive, smartphones, AR glasses, and so on. Lighting, shadows, and reflections behave differently on different devices, and testing provides some assurance of stability and visual consistency across the board.

Build Modular Assets for Faster Scaling

Design modular assets that can be reused and combined across projects. A modular approach saves time, keeps standards high in large environments, and scales from small teams to enterprise-wide pipelines.

Perfection in AR/VR is not in the minutiae but in the design: breathing space that does not break flow.

Business Purposes & Gains from Investment in 3D Modeling

Beyond the visual and interactive experience, 3D modeling for AR and VR has real commercial impact: it can increase sales conversions and lower training costs. Every sector covered here has found uses for immersive technology. When companies invest in well-optimized 3D assets, they are not just investing in aesthetics; they are shaping engagement, education, and decision-making.

Enhancing Customer Engagement in Retail

With 3D models, retail and e-commerce are transforming passive browsing into engaging, hands-on experiences. Brands use AR to visualize products so customers can see how a piece of furniture will look in their room or how a watch looks on their wrist. These experiences build confidence and keep users engaged as uncertainty falls. According to research by Shopify, AR-powered shopping experiences can increase conversion rates by as much as 94%. Reality drives revenue.

Streamlining Product Prototyping

For manufacturers, 3D models for virtual reality reduce the need to build several physical prototypes just to judge whether a design will work. Instead, designers build, test, and change virtual models in real time before a production run starts. This saves both time and materials and fosters faster feedback loops among teams. With VR-based design reviews, automakers such as Ford and BMW can evaluate ergonomics and aesthetics while reportedly cutting millions annually in design iteration costs.

Enhancing Marketing via Immersive Experiences

Moving beyond traditional campaigns, AR and VR allow marketers to get closer to potential clients. Customers can walk through a virtual showroom or explore a new property without needing to be physically there. 3D assets turn product demos into animated, immersive experiences that fit a brand’s storytelling.

Training Simulations & Cost Savings

VR simulations are replacing physical training setups in industries such as healthcare, aviation, and manufacturing. Lifelike 3D environments allow employees to practice high-risk procedures without endangering themselves or valuable equipment. This reduces training costs and increases confidence and accuracy, which multiplies the return on investment.

Cost and Time Metrics in 3D Modeling for AR/VR

While building 3D models for AR and VR is a creative process, it also requires budgeting and scheduling for the entire production. Costs depend on several variables, including model complexity, purpose, and production scale. Knowing these parameters before planning a project helps avoid unexpected overruns, get better value from every asset, and plan effectively.

Price Driver Controls

The price of a 3D asset depends on several things, including the model’s level of detail, animation and character requirements, textures, and the level of interactivity.

- Complexity: Simple, static items may cost a few hundred dollars, while something more intricate, such as a high-poly animated character for VR, could cost many thousands of dollars.

- Team Expertise: Experienced modelers, texture artists, and animators cost more, but the difference shows in the quality and effectiveness of the end product.

- Software and Licensing: Commercial packages such as Autodesk Maya or ZBrush require paid licenses, while open-source software such as Blender significantly reduces expenses but still takes considerable experience to use effectively.

Outsourcing can also affect pricing: studios in Eastern Europe or Asia often produce high-quality work at competitive prices compared to their Western counterparts.

Timeframes for Small Projects vs. Big Projects

The scale of the asset determines the timeframes.

- Simple Models: Low-poly AR assets such as products for e-commerce visualization could take anything from 1 to 3 days.

- Mid-Level Models: Characters or props for interactive VR scenes usually take 1-2 weeks, including texturing and optimization.

- Complex Scenes: For fully immersive environments or animated simulations, a timeframe of 4–8 weeks is necessary, especially if rigging, lighting, and testing are involved.

Adding buffer time for revisions and quality assurance eases delivery and leads to better performance.

Comparisons of In-House and Outsourced Budget

The choice between in-house and outsourced 3D modeling depends largely on resources and how often the work is needed.

- In-House Teams: Greater control over the process and faster iteration, but they require upfront investment in talent, hardware, and software.

- Outsourced Studios: Reduce long-term costs, provide access to specialized skills, and are best for one-off or larger AR/VR projects.

- Hybrid Model: Often the best balance of cost and performance; the in-house team sets creative direction and outsources production-heavy tasks.

Time is a cost in AR and VR, and efficiency is the art of spending it well.

Future Trends in 3D Modeling for AR/VR

Innovation in 3D modeling for AR/VR is arriving faster than ever. From AI-driven creation to cloud rendering and WebAR, new technologies are redefining how immersive experiences are designed and delivered.

Real-Time Cloud Rendering

Cloud rendering lets artists process heavy, complex 3D scenes without depending on powerful local machines. Collaboration becomes faster and more scalable through platforms like Nvidia CloudXR and AWS Nimble Studio, allowing teams to preview immersive environments in real time from anywhere.

Photogrammetry & LiDAR Integration

Photogrammetry and LiDAR scanning are game changers for realism. They capture real-world objects and spaces to build accurate 3D replicas for AR/VR environments.

WebAR and WebVR

Immersive content is no longer locked inside apps. With WebAR and WebVR, users can explore 3D experiences directly in a browser. This is proving to be one of the simplest routes to commercializing AR/VR and driving engagement, from virtual product demos to digital showrooms.

AI & Machine Learning

AI models now assist with optimization at almost every stage, from texture generation to real-time performance tuning. Such systems cut production hours, increase visual consistency, and enable more intelligent asset management, so artists spend more time on creative work and less on technical complications.

Virtual Futures: Creating from the 3D Landscape

With AR and VR changing how people communicate, learn, and create, 3D modeling is the force that turns digital imagination into living experiences. Once thought of as a specialized skill, it will tomorrow be the basis of interaction design, where creativity, precision, emotion, and engineering meet.

To be a 3D modeler for AR/VR is to learn to shape how people feel in digital spaces: to build worlds that react, objects that respond, and visuals that convince the mind of their existence. Every polygon, texture, and reflection comes together in an experience that goes beyond the screen.

With rapid advances in AI-assisted 3D modeling tools, real-time rendering, and spatial computing, the barrier to creation is falling fast. The future belongs to creators who balance artistry and optimization, who do not just design visuals but deliver immersion itself.

So go ahead, step out and challenge yourself, and start building realities that will make an impact with Esferasoft. To learn more, call now at +91 772-3000-038!

FAQs

1. What is 3D modeling for AR and VR applications?

It is the creation of digital objects, environments, and characters designed to exist in augmented or virtual spaces. These models form the visual and interactive foundation of immersive experiences.

2. Why is 3D modeling so important for developing immersive AR/VR experiences?

Realism drives immersion: well-optimized 3D models make digital interactions feel natural, believable, and emotionally engaging, which is vital for user retention and satisfaction.

3. Which industries use AR/VR-based 3D modeling the most?

Gaming, healthcare, real estate, e-commerce, and education are the leading adopters. They use 3D assets to visualize ideas, deliver training, and create interactive engagement.

4. What are the best file formats for 3D models in AR and VR projects?

FBX, glTF/GLB, OBJ, and USDZ are the most common formats; the right choice depends on which engine or platform you are targeting. Choosing a format the target supports well helps ensure smooth integration and good performance.

5. How long does it take to create a high-quality 3D model for an AR/VR project?

Simple assets might take a couple of days to create, while complex, animated, photorealistic ones might take several weeks, including testing and optimization.

6. What is the best software for 3D modeling for AR/VR development?

Choices include Blender, Autodesk Maya, ZBrush, Substance Painter, and game engines such as Unity or Unreal Engine for output.

7. What can I do to make my 3D models better suited for AR/VR applications?

Use low-poly meshes, compressed textures, LOD levels, and baked lighting. These optimizations improve real-time rendering performance and extend device battery life.

8. What skills should one possess to start 3D modeling for AR and VR?

You need spatial awareness; knowledge of texturing, lighting, and animation; familiarity with modeling software such as Blender or Maya; and experience with game engines for deployment.

9. Do AI-based tools help in speeding up the 3D modeling process in AR/VR?

Yes. Tools such as Kaedim, Nvidia Omniverse, and Runway use AI to automate parts of the 3D modeling, texturing, and optimization process, which can significantly reduce production time.